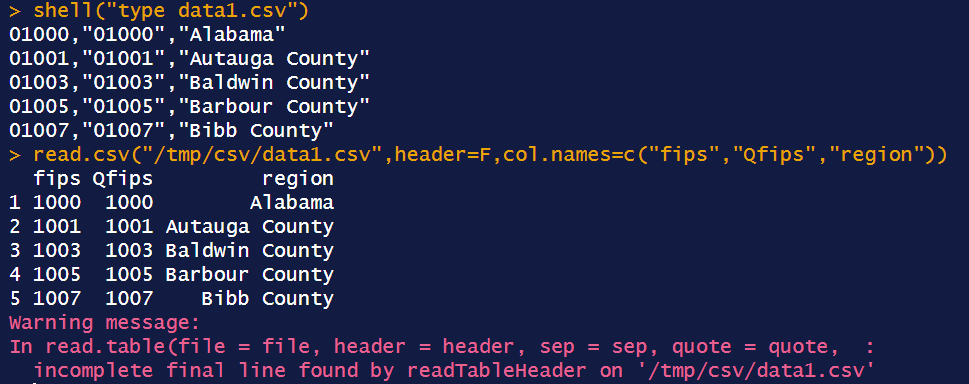

R and CSV files

When I deal with regional codes such as FIPS[1] and HUC[2], CSV file readers often mutilate my regions. Here is an example in R:

The leading zeros of the 5-digit FIPS codes are gone, as the CSV reader interpreted them as integers. This type conversion is applied regardless of whether the column is quoted. To be sure, I have an unquoted and quoted column in my data set. In the end, quoting does not make a difference in our experiments. Obviously, these FIPS codes were never integers: writing integers doesn’t lead to leading zeros. I.e., they are strings and should be parsed like that.

The warning message can be fixed by inserting a newline after the last line. Under Windows, that newline would be the two characters CR-LF (Carriage Return + Linefeed). This is R, so we can also eliminate the warning message by changing the first line! Predictability is just boring.

We can fix the most important problem — don’t mutilate my data — using the colClasses option.

However, I am more interested in default behavior here. Why? I receive a lot of data sets (including derived data such as shape files) with damaged region codes, so this seems to be a more structural problem. There is good reason to believe this is caused by using CSV files somewhere along the workflow. This is certainly a big issue when using data from different sources, some of which have correct region codes and others don’t. I have seen this issue lingering for a long time and re-occurring. It is painful to see how these stupid, mundane problems can have surprisingly long-term effects.

Codes like these should be stored as strings, not only to preserve leading zeros but also because numeric operations (addition, multiplication, etc.) have no meaning here. In general, ids (identifiers) should be stored as strings.

Excel and CSV files

Excel is doing a little bit better. When opening the CSV file with Excel, it doesn’t truncate data without notice but gives a proper warning:

When reading into Excel’s data model (a.k.a. PowerQuery), we need to remove the Changed Type step:

|

| After Changed Type step |

|

| Dropping Changed Type step |

This is better than nothing, but my preference would be that the tools just would do the right thing.

Python

The Pandas CSV reader is ruthless and drops leading zeros without a peep.

An easy fix is to use the dtype option: pandas.read_csv(R”\tmp\csv\data2.csv”,dtype=str).

Polars (a dataframe package) does the same:

The duckdb embedded database [3] is doing much better:

Here 5-digit codes stay 5-digit codes. Duckdb infers from the leading zeros that this is not an integer. Note that duckdb is also available under R.

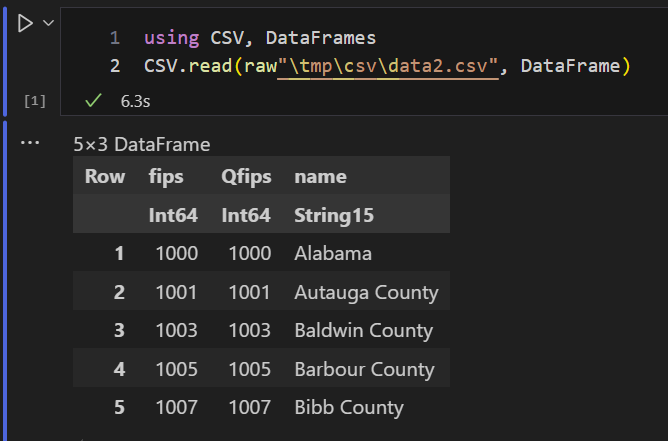

Julia

Julia has no mercy for my data and drops leading digits without any warning.

SQLite

SQLite is handling leading zeros correctly.

ClickHouse

The ClickHouse database has a different convention: the unquoted column is read as an integer while the quoted column is a string:

I expected more cases where the behavior depended on the presence of quotation marks. After all, quotations are needed when a comma or newline is part of the column. That is only the case for string columns. But as we see, chdb is the only database that follows this logic.

Interestingly, chdb is not ported to Windows. Apparently, there is no demand for that. Here I run it in WSL (Windows Subsystem for Linux).

Summary

| Behavior | CSV Readers |

|---|---|

| Type conversion to integer | R, Pandas, Polars, Julia, PowerQuery |

| Read as string | SQLite, Duckdb |

| Depends on quotes | ClickHouse |

| Warning | Excel |

Conclusion

CSV readers don’t agree on how to process our example CSV file, which contains 5-digit FIPS codes.

The database systems SQLite and duckdb read this in correctly: that is as strings. Other tools: Excel, R, and Pandas, are confused and perform a damaging type conversion.

From this simple example, we can conclude that computing is really still in the Stone Age. CSV readers are happily ignoring leading zeros.

References

- FIPS County Code, https://en.wikipedia.org/wiki/FIPS_county_code

- Hydrologic Units Maps, https://water.usgs.gov/GIS/huc.html

- https://duckdb.org/